Recently in pair programming with AI

My recent experience with GitHub Copilot Chat (the chat assistant, not autocomplete) and Raycast’s ChatGPT-3.5 integrations leads me to think that prompting will be a crucial skill for most knowledge workers. (I’m hardly the first person to make this observation!) It's not quite as essential as a Unix shell and command-line programs are for programmers, but it's still something you’ll want to know how to get the most out of.

A few examples:

- Talking/prompting a model into doing permutations and set math. This one is still blowing my mind a little. How does a word predictor do symbolic math? Not so much a stochastic parrot in this case!

- Coaching a model to write like me, provide useful feedback, and avoid the boring, hype-y voice of Twitter or whatever else it was trained on. Hitting “regenerate” a few times to get alternative phrasings to choose from. Using the model as an always-available writing partner, especially for “help me keep going/writing here…”.

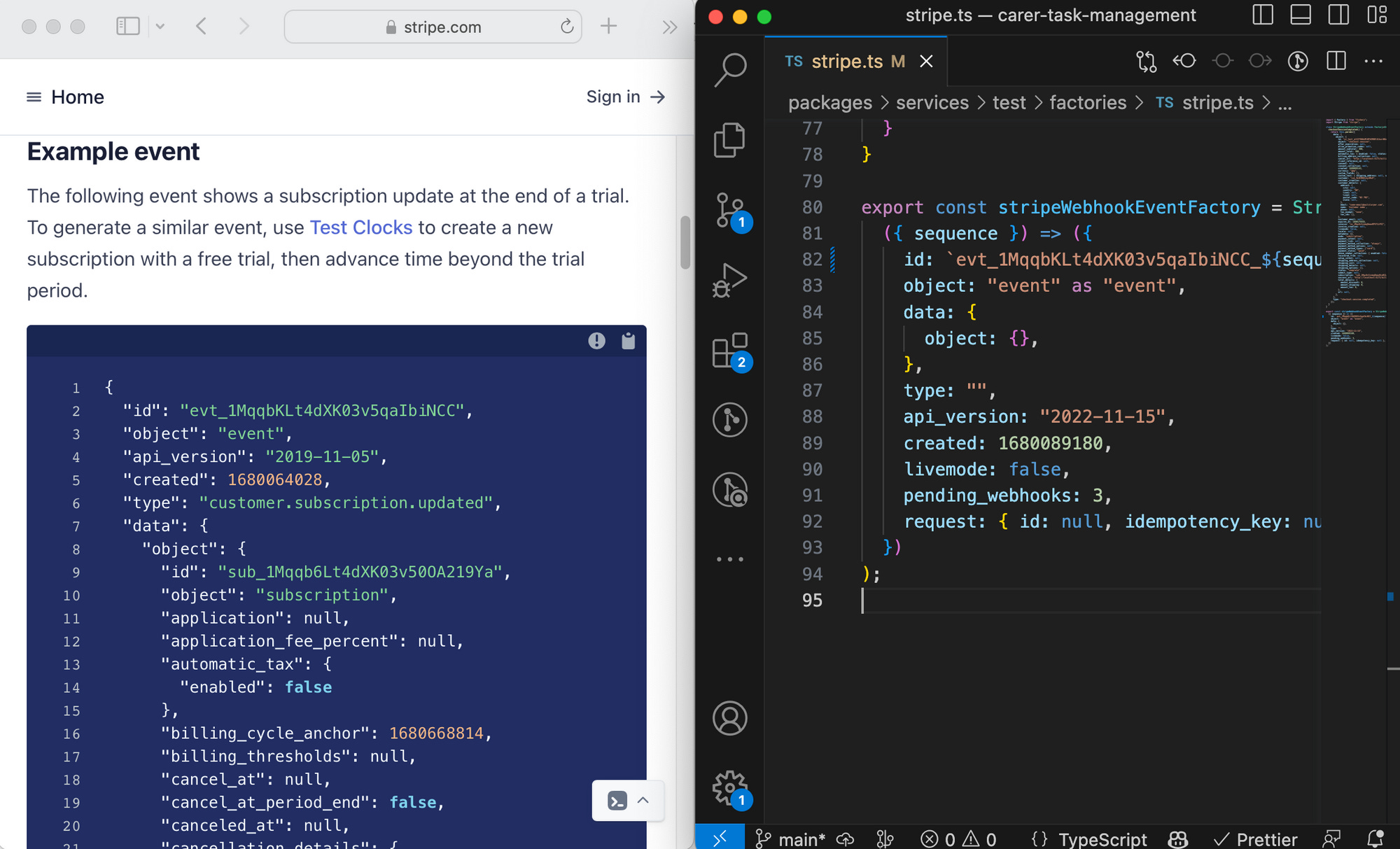

- Building up programs from scratch with Copilot’s assistance and improving them incrementally. Asking it for references that help me understand the library in question. Asking it for ideas on how to troubleshoot the program. I’ve used this to generate the boilerplate for getting started with CodeMirror, with some success.
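Since a word predictor doing permutations and set math still feels a little magical, it's cheap to check its answers against something deterministic. Here's a minimal Python sketch using only the standard library. The function names and example sets are made up for illustration.

```python
from __future__ import annotations

from itertools import permutations


def set_math(a: set[str], b: set[str]) -> dict[str, list[str]]:
    """Compute the usual set operations, sorted so they're easy to compare."""
    return {
        "union": sorted(a | b),
        "intersection": sorted(a & b),
        "a_minus_b": sorted(a - b),
        "symmetric_difference": sorted(a ^ b),
    }


def all_orderings(items: list[str]) -> list[tuple[str, ...]]:
    """Every ordering of items; there are len(items)! of them."""
    return list(permutations(items))


if __name__ == "__main__":
    # Made-up inputs; swap in whatever you asked the model about.
    ops = set_math({"red", "green", "blue"}, {"green", "blue", "yellow"})
    for name, result in ops.items():
        print(name, result)
    print(len(all_orderings(["x", "y", "z"])))  # 3! = 6
```

If the model's answer and the script disagree, that's a good prompt for another round of coaching.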

Elsewhere

Simon Willison, Building and testing C extensions for SQLite with ChatGPT Code Interpreter:

> One of the infuriating things about working with ChatGPT Code Interpreter is that it often denies abilities that you know it has.

A non-trivial share of the prompting here goes to reminding ChatGPT “this is C and gcc, you know this”. I’m not sure whether to roll my eyes or laugh. Maybe part of the system prompt says “occasionally, you’ll require a little extra encouragement, just like a less-experienced human.” Simon did manage to give ChatGPT enough courage to build the extension, though!

Despite needing to encourage the thing, this bit is promising:

> Here’s the thing I enjoy most about using Code Interpreter for these kinds of prototypes: since the prompts are short, and there’s usually a delay of 30s+ between each prompt while it does its thing, I can do the whole thing on my phone while doing other things.
>
> In this particular case I started out in bed, then got up, fed the dog, made coffee and pottered around the house for a bit—occasionally glancing back at my screen and poking it in a new direction with another prompt.
>
> This almost doesn’t count as a project at all. It began as mild curiosity, and I only started taking it seriously when it became apparent that it was likely to produce a working result.
>
> I only switched to my laptop right at the end, to try out the macOS compilation steps.

Ilia Choly, Semgrep: AutoFixes using LLMs:

> Although the built-in autofix feature is powerful, it’s limited to simple AST transforms. I’m currently exploring the idea of fixing semgrep matches using a Large Language Model (LLM). More specifically, each match is individually fed into the LLM and replaced with the response. To make this possible, I’ve created a tool called semgrepx, which can be thought of as xargs for semgrep. I then use semgrepx to rewrite the matches using the fantastic llm tool.

I’ve yet to land a big automated refactoring generated solely by AST-powered refactoring tools. By extension, I definitely haven’t tried combining AST-based mass refactoring with an LLM. But it sounds neat!
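The per-match idea Ilia describes (feed each semgrep match to a model, splice the reply back in) is easy to sketch, though this is not semgrepx’s actual implementation. It's a minimal Python sketch that assumes semgrep’s `--json` report gives each result a `path` plus `start.offset`/`end.offset` byte offsets. It also takes the rewrite step as a plain function where a real setup would call an LLM. `spans_for_file` and `apply_rewrites` are hypothetical names.

```python
from __future__ import annotations

from typing import Callable, Iterable


def spans_for_file(report: dict, path: str) -> list[tuple[int, int]]:
    """Collect (start, end) byte offsets for one file from a semgrep --json report.

    Assumption: each entry in report["results"] has "path", "start"["offset"],
    and "end"["offset"]; check this against the semgrep version you run.
    """
    return [
        (r["start"]["offset"], r["end"]["offset"])
        for r in report.get("results", [])
        if r.get("path") == path
    ]


def apply_rewrites(
    source: bytes,
    spans: Iterable[tuple[int, int]],
    rewrite: Callable[[str], str],
) -> bytes:
    """Replace each matched span with rewrite(matched_text).

    Spans are applied from last to first, so earlier offsets stay valid even
    when a replacement changes the length of the text.
    """
    ordered = sorted(set(spans), reverse=True)
    # Refuse overlapping matches: rewriting one would corrupt the other.
    for (later_start, _), (_, earlier_end) in zip(ordered, ordered[1:]):
        if earlier_end > later_start:
            raise ValueError("overlapping matches")
    out = source
    for start, end in ordered:
        matched = out[start:end].decode("utf-8")
        out = out[:start] + rewrite(matched).encode("utf-8") + out[end:]
    return out


if __name__ == "__main__":
    code = b"print(foo)\nprint(bar)\n"
    # str.upper stands in for the model; in the quoted workflow each match
    # would instead be sent to an LLM and its reply used as the replacement.
    print(apply_rewrites(code, [(6, 9), (17, 20)], str.upper).decode())
```

Working backwards through the matches is what makes the “xargs for semgrep” shape safe. Each rewrite can grow or shrink its span without invalidating the offsets of matches earlier in the file.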